"There are only two hard things in Computer Science: cache invalidation and naming things." (Phil Karlton)

How many times have you heard this quote? If you work with computers, there’s a good chance it’s more than once.

Nobody likes a slow website, right? We like fast, quick, zippy.

To satisfy our need for speed, much of the web content we access every day is served to us via frontend caches. These improve performance, reduce load on web servers and generally provide a much better user experience.

However, there’s a gotcha to all this caching goodness, especially when it comes to updating content. A frontend cache will keep serving the old versions of a page, unless expressly told not to… in Wagtail, as in love, letting go isn’t always easy.

When to call it

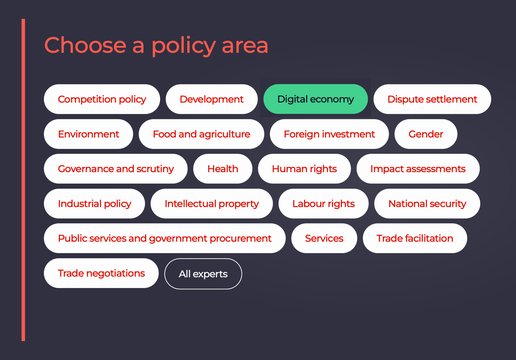

When it comes to letting go of cached page content, Wagtail’s frontend cache invalidator module (wagtail.contrib.frontend_cache) makes it easy to set up cache invalidation with a range of technologies, such as Azure, Cloudflare, Amazon CloudFront, Squid or Varnish.

Once it’s set up, Wagtail automatically asks the frontend cache to purge a page’s URLs whenever that page is published or unpublished.
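Enabling the module is mostly a settings change. The fragment below is a minimal sketch that follows the pattern in Wagtail’s frontend cache documentation. The LOCATION and DISTRIBUTION_ID values are placeholders. Both backends appear only for illustration; in practice you would configure just the one(s) you use.

```python
# settings.py (fragment)

INSTALLED_APPS = [
    # ... your existing Django and Wagtail apps ...
    "wagtail.contrib.frontend_cache",
]

WAGTAILFRONTENDCACHE = {
    # Varnish or Squid: Wagtail sends PURGE requests to this HTTP location.
    "varnish": {
        "BACKEND": "wagtail.contrib.frontend_cache.backends.HTTPBackend",
        "LOCATION": "http://localhost:8000",  # placeholder
    },
    # Amazon CloudFront: Wagtail creates invalidations for this distribution.
    "cloudfront": {
        "BACKEND": "wagtail.contrib.frontend_cache.backends.CloudfrontBackend",
        "DISTRIBUTION_ID": "your-distribution-id",  # placeholder
    },
}
```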

But there’s more...

It also gives developers a way to purge extra paths, by overriding a page’s get_cached_paths method. This is great for purging pages that include pagination or filter parameters, such as News or Blog indexes. You can even include wildcards to make the job easier.
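The hypothetical mixin below sketches the pagination case by yielding one path per listing page. It assumes the index page can report how many items it lists (get_item_count is an invented name) and that items_per_page matches the paginator used when the page is rendered. When Wagtail purges the page, it appends each yielded path to the page’s own URL.

```python
import math


class PaginatedCachePathsMixin:
    """Hypothetical mixin for a paginated index page, such as a News index.

    Use it as e.g. ``class NewsIndexPage(PaginatedCachePathsMixin, Page)``
    and implement ``get_item_count()``.
    """

    # Assumption: must match the page size used by the index page's paginator.
    items_per_page = 10

    def get_item_count(self):
        raise NotImplementedError("Return the number of items this index lists")

    def get_cached_paths(self):
        # The index page itself.
        yield "/"
        # One path per paginated page; an empty index still has page 1.
        num_pages = max(1, math.ceil(self.get_item_count() / self.items_per_page))
        for page_number in range(1, num_pages + 1):
            yield f"/?page={page_number}"
```

With 25 items, this yields "/", "/?page=1", "/?page=2" and "/?page=3". Placing the mixin before Page in the bases ensures its get_cached_paths overrides Wagtail’s default.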

Now, what happens when, say, a News article is updated, unpublished or even deleted? We’d want those changes reflected on all the News listing pages as well.

Wagtail’s signals, which build on Django’s signal framework, make this easy. We can write a handler that listens for the page_published and/or page_unpublished signals and purges all the relevant listing pages.
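A minimal sketch of such a handler follows. It assumes each News article’s listing page is its direct parent. The purge logic lives in a plain function (purge_listing_pages, a hypothetical name) so it can be read and tested on its own. The signal wiring appears only in comments because it needs a configured Wagtail project.

```python
def purge_listing_pages(instance, batch):
    """Queue the listing (parent index) page of an updated article for purging.

    Assumes `instance` is a Wagtail Page whose listing page is its direct
    parent, and `batch` is a wagtail.contrib.frontend_cache.utils.PurgeBatch.
    PurgeBatch.add_page() purges every path the page's get_cached_paths()
    returns, so paginated listing URLs are covered too.
    """
    parent = instance.get_parent()
    if parent is not None:
        # Pass the specific page so its own get_cached_paths() override applies.
        batch.add_page(parent.specific)


# Wiring it up in a Wagtail project (not executed here):
#
# from django.dispatch import receiver
# from wagtail.contrib.frontend_cache.utils import PurgeBatch
# from wagtail.signals import page_published, page_unpublished  # wagtail.core.signals before Wagtail 3
#
# @receiver(page_published)
# @receiver(page_unpublished)
# def listing_purge_handler(instance, **kwargs):
#     batch = PurgeBatch()
#     purge_listing_pages(instance, batch)
#     batch.purge()
```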

Every website is structured differently, so unfortunately there’s no one-size-fits-all solution, and you may need to develop a tailored cache invalidation strategy.

Relationship advice

Wagtail makes it simple to set up related page relationships. I may get a bit technical here, so feel free to skip this section if that’s not your thing.

If the page being edited is referenced on other pages, we’d like to know, so we can purge those pages from the cache when the edited page is published.

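```python
# myproject/utils/models.py (the concrete subclasses would normally live in their own apps)
from django.db import models
from modelcluster.fields import ParentalKey
from wagtail.admin.panels import PageChooserPanel  # wagtail.admin.edit_handlers before Wagtail 3
from wagtail.models import Orderable  # wagtail.core.models before Wagtail 3


class RelatedPage(Orderable):
    page = models.ForeignKey(
        'wagtailcore.Page',
        null=True,
        blank=True,
        on_delete=models.SET_NULL,
        related_name='+',
    )

    panels = [PageChooserPanel('page')]

    # Extend Orderable.Meta so the sort_order ordering is kept
    class Meta(Orderable.Meta):
        abstract = True


class BlogPageRelatedPage(RelatedPage):
    source_page = ParentalKey('blogs.BlogPage', related_name='related_pages')


class NewsPageRelatedPage(RelatedPage):
    source_page = ParentalKey('news.NewsPage', related_name='related_pages')
```

By making a few Django queries, we’re able to determine the relationships for the edited page. If all your related page models share a RelatedPage base class, it’s then fairly simple to find every related page model used across the website when it’s time to purge.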

Putting it together

I tend to create a CachePurge app that finds all the related page models on startup, so we don’t have to work them out every time we purge the cache.

```python
# myproject/cache_purge/apps.py
from django.apps import AppConfig, apps


class CachePurgeConfig(AppConfig):
    name = 'myproject.cache_purge'  # app label defaults to 'cache_purge'

    def __init__(self, app_name, app_module):
        super().__init__(app_name, app_module)
        self.related_page_models = []

    def ready(self):
        """
        Gather related page models for use in cache purging later
        """
        from myproject.utils.models import RelatedPage

        # apps.get_models() lists every installed concrete model without querying
        # the database, which Django advises against doing in ready()
        self.related_page_models = [
            model for model in apps.get_models() if issubclass(model, RelatedPage)
        ]
```

Next comes the related page purge function, which gets called for each updated page.

```python
from django.apps import apps
from wagtail.contrib.frontend_cache.utils import PurgeBatch


def purge_related_pages(instance, batch):
    """
    Purge pages that have a related page relationship with the instance
    """
    source_pages = set()
    # Look up the CachePurge app config by its label ('cache_purge')
    related_page_models = apps.get_app_config('cache_purge').related_page_models

    for model in related_page_models:
        related_pages = model.objects.filter(page=instance)
        source_pages.update(
            page.source_page for page in related_pages if hasattr(page, 'source_page')
        )

    for page in source_pages:
        batch.add_page(page)
```

Finally, the signal handler that fires when pages are published or unpublished.

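```python
# Same module as purge_related_pages, so PurgeBatch is already imported
from django.dispatch import receiver
from wagtail.signals import page_published, page_unpublished  # wagtail.core.signals before Wagtail 3


@receiver(page_published)
@receiver(page_unpublished)
def page_change_handler(instance, **kwargs):
    batch = PurgeBatch()
    purge_related_pages(instance, batch)
    batch.purge()
```

Other purge functions can be called from this handler too, for example to purge parent index pages or search paths.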
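A search-path purge might look like the sketch below. purge_search_paths and the default "/search/" path are hypothetical. The function relies only on Page.get_site(), Site.root_url and PurgeBatch.add_url().

```python
def purge_search_paths(instance, batch, search_paths=("/search/",)):
    """Queue a site's search URLs for purging (hypothetical helper).

    Assumes `instance` is a Wagtail Page and `batch` is a PurgeBatch.
    Replace the default "/search/" with your site's own search paths.
    """
    site = instance.get_site()
    if site is None:
        # The page isn't routable under any site, so there is nothing to purge.
        return
    root_url = site.root_url.rstrip("/")
    for path in search_paths:
        batch.add_url(root_url + "/" + path.lstrip("/"))
```

It would be called from page_change_handler alongside purge_related_pages, before batch.purge(). Search results with query strings are separate cache entries, so depending on your cache backend you may need a wildcard path to catch those too.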

Snippets, images and documents

What we’ve seen so far covers quite a lot of cases. The final ‘icing on the cake’ is purging pages when a Wagtail snippet, image or document changes. For this, we can create a receiver that triggers on the post_save and pre_delete signals.

It takes advantage of the get_usage method to find the pages where an item is used, and purges those pages whenever the item changes.

```python
from django.db.models.signals import post_save, pre_delete
from django.dispatch import receiver
from wagtail.contrib.frontend_cache.utils import PurgeBatch


@receiver(post_save)
@receiver(pre_delete)
def change_handler(instance, **kwargs):
    """
    Generic receiver for purging pages on which snippets, images and documents are used
    """
    # pre_delete rather than post_delete, so usage is looked up before the item is removed
    if hasattr(instance, 'get_usage'):
        usage_pages = instance.get_usage()
        if usage_pages:
            batch = PurgeBatch()
            for page in usage_pages:
                batch.add_page(page)
            batch.purge()
```

Unfortunately, this doesn’t currently handle snippets used in StreamField blocks, though support for that looks like it may arrive in the future. In the meantime, there may be other ways to solve the problem with StreamField indexing.

Final piece of the puzzle

We have used these techniques to implement caching for a number of our clients, and the performance benefits and improved user experience are clear to see. However, one issue became apparent that affected the workflow and experience of website editors: responses from AWS CloudFront to cache purge requests could be slow, or fail altogether, if too many requests were made simultaneously.

Multithreading to the rescue

To solve this problem, we took advantage of Python’s concurrent.futures module, in particular the ThreadPoolExecutor. We set up a pool of worker threads to execute frontend cache purge calls asynchronously. We could have achieved the same thing with Celery (albeit at the expense of another Heroku worker dyno), but using threads keeps things simple and works great. By submitting requests via our custom backend, we’re able to eliminate the delays site editors experienced when publishing pages, and rate-limit the requests that were causing CloudFront to fail... Tidy!
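The custom backend isn’t included here, so the following is only a sketch of the general idea using concurrent.futures.ThreadPoolExecutor, not that backend. ThreadedPurger, purge_func and min_interval are hypothetical names. purge_func stands in for the blocking call a backend makes, max_workers caps how many purge requests are in flight at once, and min_interval optionally spaces out request start times as a crude rate limit.

```python
import logging
import threading
import time
from concurrent.futures import ThreadPoolExecutor

logger = logging.getLogger(__name__)


class ThreadedPurger:
    """Run blocking purge calls on a small pool of worker threads (hypothetical helper)."""

    def __init__(self, purge_func, max_workers=2, min_interval=0.0):
        # purge_func(urls) is the blocking call, e.g. a CDN invalidation request.
        self._purge_func = purge_func
        # A small pool bounds how many purge requests run at the same time.
        self._executor = ThreadPoolExecutor(
            max_workers=max_workers, thread_name_prefix="cache-purge"
        )
        # Minimum gap, in seconds, between the starts of consecutive requests.
        self._min_interval = min_interval
        self._lock = threading.Lock()
        self._last_start = float("-inf")

    def _run(self, urls):
        # Holding the lock while sleeping makes worker threads take turns starting.
        with self._lock:
            wait = self._last_start + self._min_interval - time.monotonic()
            if wait > 0:
                time.sleep(wait)
            self._last_start = time.monotonic()
        try:
            return self._purge_func(urls)
        except Exception:
            # Worker exceptions are otherwise only visible through the Future.
            logger.exception("Frontend cache purge failed for %d URL(s)", len(urls))
            raise

    def submit(self, urls):
        """Queue a purge and return a Future straight away, without blocking."""
        return self._executor.submit(self._run, list(urls))

    def shutdown(self, wait=True):
        """Stop accepting work; by default, wait for queued purges to finish."""
        self._executor.shutdown(wait=wait)
```

In a Wagtail project, one way to use this might be a subclass of CloudfrontBackend whose purge_batch(urls) hands the parent class’s purge_batch to a shared ThreadedPurger. Because submit() returns immediately, the editor’s publish request isn’t held up. The trade-off compared with Celery is durability: purges still queued when the process stops are lost.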

So is letting go really that hard?

Well, there are a lot of things to consider when fine-tuning your caching. The benefits of caching are clear, especially for heavily trafficked websites with complex pages, so putting the effort into making it work well for site visitors and your editorial team is bound to be worthwhile.

If you’re using Wagtail with a caching service, I would say you’re in a really good place.